The Hogwild! idea

the Diploma thesis learning – April 15, 2021

I have been working on a project based on the paper “HOGWILD!: A Lock-Free Approach to Parallelizing Stochastic Gradient Descent”.

https://papers.nips.cc/paper/2011/file/218a0aefd1d1a4be65601cc6ddc1520e-Paper.pdf

The first iteration was reading the paper and building a logical model of how Stochastic Gradient Descent (SGD) works and how it could be parallelized.
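A minimal sketch of the lock-free idea, under some loud assumptions: it is not the project's code, it uses Julia threads rather than separate worker processes, and `hogwild_sgd!`, the step size and the toy least-squares data are all made up for illustration. The paper's analysis targets sparse updates, so this small dense problem only shows the mechanics: every thread reads and writes the shared weight vector without taking a lock.

```julia
using Random, LinearAlgebra

# Hogwild!-style SGD for least squares (illustrative, hypothetical helper):
# all threads update the shared vector `w` in place, with no locking.
function hogwild_sgd!(w, X, y; epochs = 50, lr = 0.01)
    n = size(X, 1)
    for _ in 1:epochs
        Threads.@threads for k in 1:n
            i = rand(1:n)                        # pick one sample at random
            err = dot(view(X, i, :), w) - y[i]   # residual of sample i
            # Unsynchronized update: concurrent writes may overwrite each other.
            for j in eachindex(w)
                w[j] -= lr * err * X[i, j]
            end
        end
    end
    return w
end

Random.seed!(1)
X = randn(200, 3)
w_true = [1.0, -2.0, 0.5]
y = X * w_true                    # noise-free targets, so SGD can recover w_true
w = hogwild_sgd!(zeros(3), X, y)
println(w)
```

Starting Julia with `julia --threads 4` runs the inner loop on several threads; with a single thread the same code reduces to plain sequential SGD.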

Creating the Julia project

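using Distributed

# `Information` and `calculate_gradient` are not shown in this snippet;
# they have to be defined on every worker as well.
@everywhere function run_SSP_worker(batch, server, channel, model, ps, loss)
    id = myid()
    println("$(id) has started!")

    # Loop until the next item waiting in the channel is `nothing`.
    while fetch(channel) !== nothing
        println("$(id) is bamboozled!")
        xs = take!(channel)
        println("$(id) has\n $(ps)\n $(xs)")

        data = batch()

        # WHY AM I NOT ALLOWED TO REMOVE MODEL HERE??
        gs = calculate_gradient(loss, model, ps, xs, data)
        information = Information(id, gs)
        println("$(data)\n # $(xs)\n # $(gs)")

        # Send the gradient to the server; put! blocks until the channel accepts it.
        put!(server, information)
    end
end

Math code is ![]()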

At first, we sample ![]() in the ![]() (![]() is odd) equidistant points around ![]():

![]()

where

Then we interpolate points

(1)

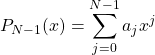

Its coefficients

(2) ![]()

Here are references to existing equations: (1), (2).

Here is a reference to a non-existing equation (??).